When Does ChatGPT Actually Use the Web?

Most AI visibility strategies rest on a false assumption

The assumption: if a user asks ChatGPT a question, your content has a chance to shape the answer.

Our research shows this holds only for a subset of queries, and knowing which ones is the foundation of effective Answer Engine Optimization (AEO).

At Salespeak, we set out to answer a more precise question:

When does ChatGPT decide it needs the web at all?

Because if the model doesn't retrieve, there is no ranking, no citation, and no opportunity to be discovered.

The Real Shift From Search to Answers

Traditional search engines always retrieve first and rank second.

Answer engines don't.

Modern language models can answer many questions directly from internal knowledge. Retrieval is optional — and increasingly selective.

This changes the optimization problem entirely.

AEO isn't about where you rank.

It's about whether you're even eligible to appear.

How We Studied Retrieval Behavior

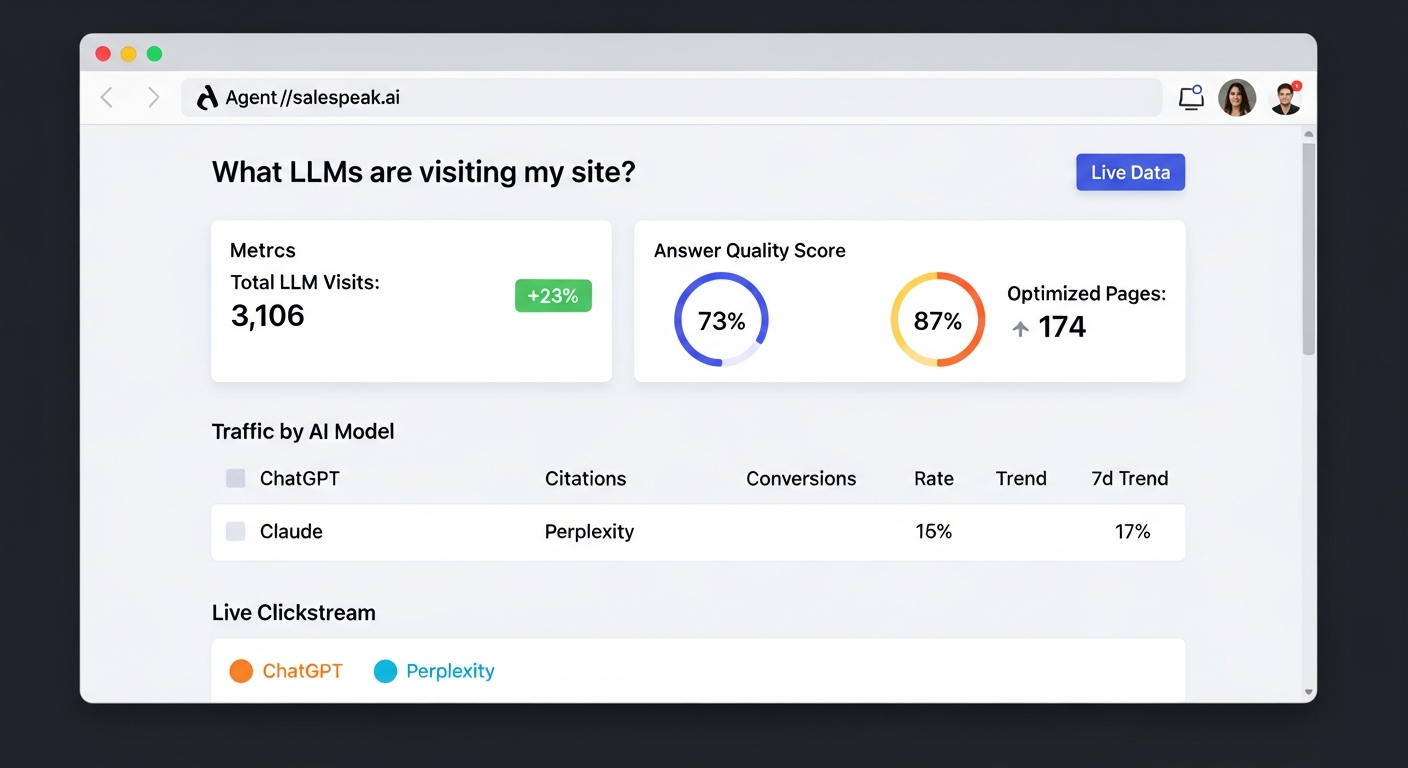

We analyzed hundreds of thousands of real ChatGPT conversations observed through the Salespeak platform to understand when the model invokes live web search versus answering from its training data alone.

Each prompt was classified into one of two intent categories:

- Research with intent to buy — comparisons, alternatives, "best X," pricing, evaluations

- Informational — explanations, definitions, how-tos, brainstorming, content generation, and general learning

Instead of focusing on citations or sources, we measured something more fundamental:

Did the model open the web, or not?
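At its core, this measurement is a per-category rate. Below is a minimal Python sketch of that computation. It assumes each logged prompt already carries an intent label and a flag recording whether the model invoked web search. The `LoggedPrompt` record, the label strings, and the sample data are all hypothetical. They are not Salespeak's actual pipeline, and they are not results from the study.

```python
from collections import defaultdict
from dataclasses import dataclass


# Hypothetical log record: one prompt, its intent label, and whether
# the model invoked live web search while answering it.
@dataclass
class LoggedPrompt:
    intent: str     # assumed labels: "research_to_buy" or "informational"
    used_web: bool  # True if the conversation shows a web search


def retrieval_rates(prompts):
    """Share of prompts in each intent category that triggered web search."""
    totals = defaultdict(int)
    searched = defaultdict(int)
    for p in prompts:
        totals[p.intent] += 1
        if p.used_web:
            searched[p.intent] += 1
    return {intent: searched[intent] / totals[intent] for intent in totals}


def relative_likelihood(rates, a, b):
    """How many times more often category a triggers retrieval than category b."""
    if rates[b] == 0:
        raise ValueError(f"category {b!r} never triggered retrieval")
    return rates[a] / rates[b]


if __name__ == "__main__":
    # Toy sample invented for illustration; NOT data from the study.
    sample = [
        LoggedPrompt("research_to_buy", True),
        LoggedPrompt("research_to_buy", True),
        LoggedPrompt("research_to_buy", False),
        LoggedPrompt("informational", False),
        LoggedPrompt("informational", True),
        LoggedPrompt("informational", False),
        LoggedPrompt("informational", False),
        LoggedPrompt("informational", False),
        LoggedPrompt("informational", False),
    ]
    rates = retrieval_rates(sample)
    print(rates)
    print(relative_likelihood(rates, "research_to_buy", "informational"))
```

To get an "N times more likely" comparison, divide one category's retrieval rate by the other's. That is what `relative_likelihood` does.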

The Core Finding: Evaluation Triggers Retrieval

The difference between these two categories is stark.

Queries that signal research with intent to buy are multiple times more likely to trigger web retrieval than informational queries.

This isn't about query length or sophistication.

It's about risk.

When a user is evaluating a product, service, or vendor, the model faces immediate constraints:

- Markets change rapidly

- Features and pricing evolve

- New competitors appear

- Recommending incorrectly has real consequences

Answering purely from memory becomes dangerous.

So the model does the rational thing: it asks the web to confirm.

Why Informational Queries Usually Don't Trigger Search

Informational prompts — including generative tasks like writing, summarizing, or ideation — are still mostly handled internally.

If the answer is:

- Stable

- Low-risk

- Non-competitive

- Unlikely to change week-to-week

Retrieval often isn't necessary.

From the model's perspective, opening the web is a cost, not just financially but architecturally: every search adds an extra step and extra latency before an answer can be written. It's only used when uncertainty crosses a threshold.

That's why, today, AEO opportunities are disproportionately concentrated in research-stage queries.
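The threshold idea can be made concrete with a toy model. The Python sketch below turns the four properties above into a simple score. Each property that makes an answer risky to give from memory adds one point, and the hypothetical model searches only when the score reaches a threshold. The trait names, the one-point-per-trait scoring, and the default threshold are all assumptions made for illustration. This is not a description of how ChatGPT actually decides.

```python
from dataclasses import dataclass

# Toy model only: "retrieve when uncertainty crosses a threshold".
# Traits, scoring, and threshold are hypothetical, not ChatGPT's real logic.


@dataclass
class QueryTraits:
    volatile: bool        # answer may be outdated (pricing, features, new entrants)
    high_risk: bool       # a wrong answer has real consequences
    competitive: bool     # comparisons, alternatives, "best X"
    changes_weekly: bool  # likely to change week-to-week


def uncertainty_score(traits: QueryTraits) -> int:
    """Count how many risk traits apply (0 to 4); True sums as 1."""
    return sum([traits.volatile, traits.high_risk, traits.competitive, traits.changes_weekly])


def should_search(traits: QueryTraits, threshold: int = 2) -> bool:
    """Search only when the uncertainty score reaches the threshold."""
    return uncertainty_score(traits) >= threshold


if __name__ == "__main__":
    queries = {
        "pricing comparison": QueryTraits(True, True, True, True),
        "definition": QueryTraits(False, False, False, False),
        "how-to": QueryTraits(True, False, False, False),
    }
    for name, q in queries.items():
        # Compare the default threshold with a lower, hypothetical one.
        print(name, should_search(q), should_search(q, threshold=1))
```

With `threshold=1`, the how-to query flips into a search. This is one way to picture the shift described later in this piece, where cheaper retrieval pulls informational queries into the arena.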

Reframing "Opportunity" in AEO

One way to think about this is arena size.

Research-with-intent queries create a large arena — the model actively looks outward.

Informational queries create a much smaller one — the model usually answers alone.

Outside the arena, whenever the model answers from memory, no amount of optimization helps. Your content never enters the conversation.

This explains why many early AEO wins feel "commercial." It's not that answer engines favor brands. It's that evaluation creates uncertainty, and uncertainty forces retrieval.

Why the Informational Gap Won't Hold

The most important insight from our research isn't where retrieval is high.

It's where it's still low.

Model providers are under pressure to:

- Reduce inference costs

- Rely less on massive parameter counts

- Shift work from reasoning to retrieval

Web search is cheap. Inference on massive models is expensive.

The obvious architectural direction is smaller models paired with heavier retrieval, where the web does more of the work and the model orchestrates synthesis.

When that happens, informational queries — especially explanatory and educational ones — become far more likely to trigger search.

If that sounds familiar, it should. We're already seeing early versions of this shift across AI-powered search experiences.

What This Means for Your AEO Strategy

If you're optimizing for AI visibility in 2026, here's the framework:

- Prioritize evaluation-stage content now. These queries already trigger retrieval at high rates. Product comparisons, alternatives pages, and buying guides are where citations happen today.

- Build informational content for tomorrow. As retrieval expands, your explanatory and educational content becomes eligible. The investment pays off when the architecture shifts.

- Stop thinking about ranking. The first question is whether the model searches at all. Structure your content to be retrievable, not just rankable.

- Monitor retrieval patterns. The threshold for when models open the web is moving. What's answered internally today may trigger search next quarter.
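One way to monitor retrieval patterns is to re-run a fixed set of tracked prompts on a regular schedule and compare snapshots. The Python sketch below assumes you already record, for each prompt, whether the answer involved a live web search. How you capture that flag depends on your own tooling, and this sketch does not assume any particular API. The prompts and snapshot values are hypothetical.

```python
from typing import Dict, List

# Sketch: each snapshot maps a tracked prompt to whether the answer used
# live web search (True) or was answered internally (False).


def retrieval_shift(before: Dict[str, bool], after: Dict[str, bool]) -> Dict[str, List[str]]:
    """Compare two snapshots of the same prompts and report which ones changed."""
    common = before.keys() & after.keys()  # only prompts present in both snapshots
    return {
        "now_searches": sorted(p for p in common if after[p] and not before[p]),
        "no_longer_searches": sorted(p for p in common if before[p] and not after[p]),
    }


def retrieval_rate(snapshot: Dict[str, bool]) -> float:
    """Share of tracked prompts that triggered web search (0.0 if empty)."""
    return sum(snapshot.values()) / len(snapshot) if snapshot else 0.0


if __name__ == "__main__":
    # Hypothetical snapshots from two quarters; not real measurements.
    q1 = {
        "best CRM for startups": True,
        "what is lead scoring": False,
        "how to write a sales email": False,
    }
    q2 = {
        "best CRM for startups": True,
        "what is lead scoring": True,
        "how to write a sales email": False,
    }
    print(retrieval_rate(q1), retrieval_rate(q2))
    print(retrieval_shift(q1, q2))
```

The prompts listed under `now_searches` are the informational queries that have just crossed into the arena. They are candidates for the explanatory content described above.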

The companies that understand this distinction — between content that can appear and content that will never be considered — are the ones building durable AI visibility.

Everyone else is optimizing for an opportunity that doesn't exist.